In a simple, straightforward implementation of a continuous integration pipeline, you would typically create a chain of procedures that perform the same set of operations on whatever input is supplied. This provides a build process that is well-defined, repeatable, and consistent, and which serves as the foundation for deploying quality software. Production systems of any real size require additional complexity, and two such accommodations are the ability to accept new or unique inputs and the ability to adapt dynamically to the types of input provided.

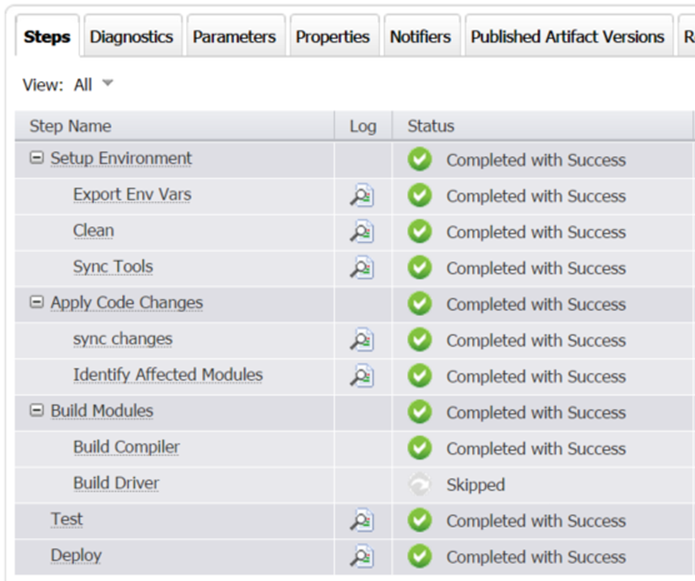

Within ElectricCommander you can achieve a certain level of flexibility through procedure parameters, subprocedures, and run conditions. Suppose, for instance, that you have two modules to build as part of your process, and you want to build each module only if it has code changes associated with it. A basic approach would be to create a procedure for each module, append each one as a subprocedure to the larger build process, and give each a run condition so that it executes only when its flag is set (see Fig. 1).

Figure 1: Statically defined steps that are either executed or skipped based on flags.
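To make the flag-based approach concrete, here is a minimal Python sketch of deciding which flags to set from the list of files changed in a commit. It assumes a hypothetical layout in which each module lives in its own top-level directory (moduleA/, moduleB/), and that each subprocedure's run condition expands its module's flag and skips the subprocedure when the value is "false". How the flags reach the job (procedure parameters, properties, or something else) is left open.

```python
"""Compute the per-module flags that the run conditions would test.

Hypothetical layout: each module lives in its own top-level directory
(moduleA/, moduleB/). A flag is "true" when any changed file is under it.
"""
from __future__ import annotations

from pathlib import PurePosixPath


def module_flags(changed_paths: list[str], modules: list[str]) -> dict[str, str]:
    touched = set()
    for path in changed_paths:
        # Top-level directory of each changed file, e.g. "moduleA/src/x.c" -> "moduleA".
        parts = PurePosixPath(path).parts
        if parts:
            touched.add(parts[0])
    return {m: "true" if m in touched else "false" for m in modules}


if __name__ == "__main__":
    print(module_flags(["moduleA/src/main.c", "docs/README.md"],
                       ["moduleA", "moduleB"]))
    # {'moduleA': 'true', 'moduleB': 'false'}
```

Note that moduleB's subprocedure still appears in the job; it is simply skipped, which is the clutter the dynamic approach later removes.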

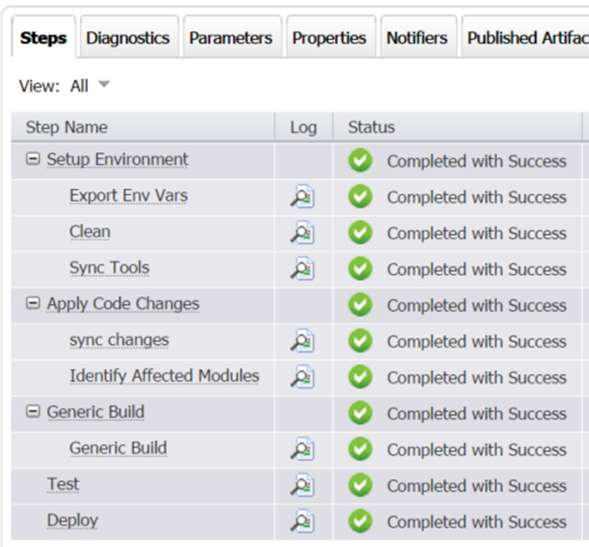

A slightly more advanced method would be to create a single generalized procedure that contains the logic needed to build the right thing, given the appropriate parameters. Done well, such a procedure could even handle modules it has never seen before, making extension painless. The problem with this approach is that you have now hidden the process inside a black box and given up the easy parallel execution of job steps that Commander affords (see Fig. 2).
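A generalized procedure of this kind might boil down to something like the following Python sketch. The conventions it applies (use a module's own build.sh if present, otherwise fall back to running make -C on the module directory) are hypothetical stand-ins for whatever your modules actually use. Every module is built one after another inside a single step, which is exactly where the visibility and the parallelism are lost.

```python
"""A single generalized build step that handles any module by convention.

Hypothetical conventions: a module with its own build.sh is built with it;
anything else falls back to "make -C <module dir>".
"""
from __future__ import annotations

import shlex
import subprocess
from pathlib import Path


def build_command(module: str, root: Path = Path(".")) -> list[str]:
    script = root / module / "build.sh"
    if script.exists():
        return ["sh", str(script)]
    # Unknown modules still get a sensible default, so new ones need no edits.
    return ["make", "-C", str(root / module)]


def build_all(modules: list[str], root: Path = Path("."),
              dry_run: bool = True) -> list[str]:
    log = []
    for module in modules:  # sequential: one opaque step, no parallelism
        argv = build_command(module, root)
        log.append(shlex.join(argv))
        if not dry_run:
            subprocess.run(argv, check=True)
    return log


if __name__ == "__main__":
    for line in build_all(["moduleA", "moduleB"]):
        print(line)
```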

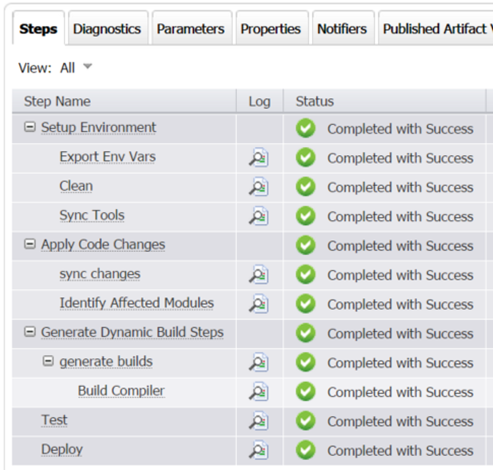

A better approach is a procedure that, at run time, appends only the steps needed for the current build. This removes the clutter of skipped procedures while preserving flexibility, visibility, and the ability to run activities in parallel. You can do this with the createJobStep API method (see Fig. 3).
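The following Python sketch shows the idea: it creates one build step per changed module and none for the rest. It assumes the script runs as the command of a Commander job step with ectool on the PATH, and that createJobStep, called without --jobStepId or --parentPath, attaches the new steps to the running job; verify that against your Commander version's documentation. The step names and the make command are hypothetical. By default it only prints the ectool calls (dry_run=True).

```python
"""Append a build step only for the modules that actually changed.

Assumes it runs inside a Commander job step with ectool on the PATH.
Step names and "make -C <module>" are hypothetical.
"""
from __future__ import annotations

import shlex
import subprocess


def steps_for(changed_modules: list[str]) -> list[list[str]]:
    return [
        ["ectool", "createJobStep",
         "--jobStepName", f"build-{module}",
         "--command", f"make -C {module}",
         "--parallel", "1"]  # mark parallel so sibling builds can overlap
        for module in changed_modules
    ]


def append_steps(changed_modules: list[str],
                 dry_run: bool = True) -> list[list[str]]:
    argvs = steps_for(changed_modules)
    for argv in argvs:
        if dry_run:
            print(shlex.join(argv))
        else:
            subprocess.run(argv, check=True)
    return argvs


if __name__ == "__main__":
    append_steps(["moduleA"])  # moduleB unchanged: no step is created for it
```

Unlike the run-condition version, an unchanged module leaves no trace in the job at all.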

With createJobStep you can, at run time, either define a completely new step or select an existing procedure and append it to a given job as a subprocedure. To create a new step dynamically, the bare minimum is to call createJobStep and supply the “--command” argument with the code you want executed. It is usually best to also specify a name for the generated step; otherwise, it will get a name that combines the parent step's name and the job step ID number. The good news is that a very thorough set of options gives you complete control over every detail of the step you generate, as shown below.

    Usage: createJobStep
        [--jobStepId <jobStepId>]
        [--parentPath <parentPath>]
        [--jobStepName <jobStepName>]
        [--projectName <projectName>]
        [--procedureName <procedureName>]
        [--stepName <stepName>]
        [--external <0|1|true|false>]
        [--status <status>]
        [--credential <credName>=<userName> [<credName>=<userName> ...]]
        [--description <description>]
        [--credentialName <credentialName>]
        [--resourceName <resourceName>]
        [--command <command>]
        [--subprocedure <subprocedure>]
        [--subproject <subproject>]
        [--workingDirectory <workingDirectory>]
        [--timeLimit <timeLimit>]
        [--timeLimitUnits <hours|minutes|seconds>]
        [--postProcessor <postProcessor>]
        [--parallel <0|1|true|false>]
        [--logFileName <logFileName>]
        [--actualParameter <var1>=<val1> [<var2>=<val2> ...]]
        [--exclusive <0|1|true|false>]
        [--exclusiveMode <none|job|step|call>]
        [--releaseExclusive <0|1|true|false>]
        [--releaseMode <none|release|releaseToJob>]
        [--alwaysRun <0|1|true|false>]
        [--shell <shell>]
        [--errorHandling <failProcedure|abortProcedure|abortProcedureNow|abortJob|abortJobNow|ignore>]
        [--condition <condition>]
        [--broadcast <0|1|true|false>]
        [--workspaceName <workspaceName>]
        [--precondition <precondition>]
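As an illustration of the two forms described above, this Python sketch assembles an ectool createJobStep command line either for a new command step or for an existing procedure appended as a subprocedure. It is not an official binding: it assumes ectool is on the PATH inside a running job step, and the project, procedure, step, and parameter names are hypothetical. The flags it emits all appear in the usage listing; any extra keyword argument is passed through as a flag of the same name.

```python
"""Build an ectool createJobStep invocation from Python keyword arguments.

A sketch, not an official binding. Names in the example are hypothetical.
"""
from __future__ import annotations

import shlex
import subprocess


def create_job_step_args(name: str, *, command: str | None = None,
                         subproject: str | None = None,
                         subprocedure: str | None = None,
                         actual_parameters: dict[str, str] | None = None,
                         **options) -> list[str]:
    if (command is None) == (subprocedure is None):
        raise ValueError("give exactly one of command or subprocedure")
    if command is not None and actual_parameters:
        raise ValueError("actual_parameters only apply to a subprocedure step")
    argv = ["ectool", "createJobStep", "--jobStepName", name]
    if command is not None:
        argv += ["--command", command]
    else:
        if subproject is not None:
            argv += ["--subproject", subproject]
        argv += ["--subprocedure", subprocedure]
        if actual_parameters:
            # --actualParameter takes one or more name=value pairs after the flag.
            argv.append("--actualParameter")
            argv += [f"{key}={value}" for key, value in actual_parameters.items()]
    for flag, value in options.items():
        # Remaining keywords map directly to flags, e.g. timeLimit=30.
        if isinstance(value, bool):
            value = "true" if value else "false"
        argv += [f"--{flag}", str(value)]
    return argv


def create_job_step(name: str, dry_run: bool = True, **kwargs) -> list[str]:
    argv = create_job_step_args(name, **kwargs)
    if dry_run:
        print(shlex.join(argv))
    else:
        subprocess.run(argv, check=True)
    return argv


if __name__ == "__main__":
    # A new command step with a readable name and a time limit.
    create_job_step("unit-tests", command="make test",
                    timeLimit=30, timeLimitUnits="minutes")
    # An existing procedure appended as a subprocedure, with parameters.
    create_job_step("build-moduleA", subproject="Build Tools",
                    subprocedure="Build Module",
                    actual_parameters={"module": "moduleA"},
                    parallel=True)
```

Making the step name a required argument is a deliberate choice here, following the advice to name generated steps rather than accept the default name.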

An additional benefit of createJobStep is that it opens the door to defining entire build processes outside Commander, in configuration files that are easy for developers to read, modify, and place under version control. Simply define a procedure that reads the configuration file and, based on its contents, appends the appropriate steps to the running build.

If you adopt this style, keep an eye on visibility and security. One of Commander's major benefits is the visibility it provides into the workings of complex builds. You want to avoid the black-box situation mentioned earlier, because it makes it hard for new eyes to understand what is happening and makes debugging trickier. You can preserve visibility by appending several smaller steps rather than a single monolithic step that performs multiple tasks. As for security, it should go without saying that if you allow any developer to write a build configuration file that Commander will execute, you need appropriate safeguards in place.
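A configuration-driven build might look like the following Python sketch, which turns a small JSON file into one createJobStep call per entry and prints them. The JSON schema, the allowlist of programs, and the rejected shell characters are hypothetical illustrations of the kind of safeguards mentioned above, not a complete security model: an allowed tool such as make still runs whatever the checked-in Makefile tells it to.

```python
"""Turn a developer-maintained JSON build file into createJobStep calls.

Hypothetical schema:
  {"steps": [{"name": "...", "command": "...", "parallel": true}, ...]}
The allowlist and the rejected characters are illustrative safeguards only.
"""
from __future__ import annotations

import json
import shlex

ALLOWED_PROGRAMS = {"make", "mvn", "pytest"}  # hypothetical allowlist
SHELL_METACHARACTERS = set(";&|$`<>\n")  # block command chaining and redirection


def steps_from_config(text: str) -> list[list[str]]:
    config = json.loads(text)
    argvs = []
    for step in config["steps"]:
        name, command = step["name"], step["command"]
        if SHELL_METACHARACTERS & set(command):
            raise ValueError(f"step {name!r}: shell metacharacters are not allowed")
        tokens = shlex.split(command)
        if not tokens or tokens[0] not in ALLOWED_PROGRAMS:
            raise ValueError(f"step {name!r}: program is not on the allowlist")
        # One small, named step per entry keeps the build visible in Commander.
        argv = ["ectool", "createJobStep",
                "--jobStepName", name,
                "--command", command]
        if step.get("parallel"):
            argv += ["--parallel", "1"]
        argvs.append(argv)
    return argvs


if __name__ == "__main__":
    example = """{"steps": [
        {"name": "build-moduleA", "command": "make -C moduleA", "parallel": true},
        {"name": "build-moduleB", "command": "make -C moduleB", "parallel": true},
        {"name": "unit-tests", "command": "pytest tests"}]}"""
    for argv in steps_from_config(example):
        print(shlex.join(argv))
```

Because each configuration entry becomes its own named step, the resulting job still shows the individual pieces of work rather than one monolithic step.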

Ultimately, the goal of creating dynamic steps is to declutter and streamline builds, making them more intelligible and efficient. The createJobStep command is a very powerful tool for achieving this end, and like all powerful tools, it must be used with care.

David Hubbell

Software Engineer

SPK and Associates