What is an SSD?

The term SSD (solid state drive) can refer to any drive that uses solid state components to store information or, more to the point, has no moving parts. When we hear terms like ‘flash drive’ or ‘thumb drive’, we typically don’t think SSD, but technically speaking, they fit the criteria. The modern-day SSD, however, generally refers to a solid state drive that connects over a SATA bus. There are other implementations, such as PCIe SSDs, which are usually a card installed inside a desktop computer, and flash storage soldered directly onto the motherboard, as in some embedded devices. Generally, when the term SSD is tossed around, the image that comes to mind is a device that more or less resembles a modern hard drive and connects in the same fashion, via SATA.

A Brief History

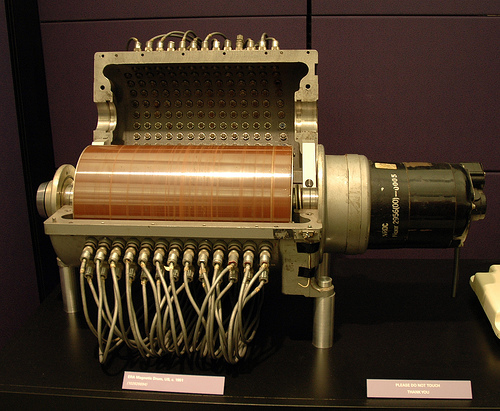

The SSD can be traced as far back as the 1950s, the era of the vacuum tube and punch cards, but early solid state storage was prohibitively expensive and not especially reliable. It was replaced by the cheaper drum storage unit, which is generally regarded as the precursor to the modern hard disk drive. The SSD didn’t see much use until the ’70s and ’80s, when companies such as IBM and Cray began to build the early supercomputers, but the high cost and lack of a ten-year life led many companies to abandon the technology.

Though not widely used because of the high cost, the technology continued to be developed, as it was apparent that with continuous improvement the SSD would eventually be commercially viable. In the mid ’80s, the idea started to see use again as costs came down. Many of the drives from this period were RAM based and required a rechargeable battery, because the memory (as is the case with current RAM) couldn’t retain data without a constant source of power. RAM-based drives are still used today because they are significantly faster than normal flash-based SSDs, but their volatility limits their viability for long-term storage.

In 1995, M-Systems introduced the flash-based SSD. It didn’t require a battery, but it wasn’t nearly as fast as RAM-based solutions. The technology has since been used in a wide variety of markets, from cellular phones to military applications, because of a considerably higher MTBF (mean time between failures) than previous SSD alternatives. The ability of flash-based drives to withstand significant temperature changes, vibration and impacts made them particularly appealing to the mobile device industry and the military.

In 1999, a number of announcements about flash-based SSDs brought new development into the market. In 2007, the modern SSD made its first real commercial debut, sporting 320 GB and 100,000 IOPS (input/output operations per second).

EFD & SSD

The term EFD (enterprise flash drive) was coined by EMC in 2008. An EFD differs from an SSD only in its specifications, as it is intended for enterprise applications such as data centers. EMC intended the term to signify a higher standard, but no organization currently defines these specifications, and nothing prevents a manufacturer from claiming an SSD is ‘enterprise level’.

SSD vs Hard Drives – the debate

The SSD has many advantages over the hard drive, but one shouldn’t simply dismiss hard drives as irrelevant, though marketing buzz may lead people to do so anyway.

Some of the obvious advantages of an SSD are start-up time, low latency, a lack of moving parts (and therefore shock and vibration resistance), no fragmentation issue, no magnetic sensitivity, weight, and power consumption. Because there is no moving storage medium, an SSD can access any part of the storage area with an extremely low latency that is consistent across the entire drive. To put this in perspective, a normal hard drive needs periodic defragmentation so that files are stored contiguously and the drive head doesn’t have to travel as far, which in turn improves performance. But because a hard drive has moving components, even the fastest hard drives have to wait for the disk to spin around to the data that’s been requested. An SSD can reach every part of the storage medium with the same latency, which makes fragmentation irrelevant to its performance.
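To put a rough number on the "wait for the disk to spin" part, here is a minimal sketch in Python. It assumes the usual rule of thumb that, on average, the platter has to turn half a revolution before the requested data passes under the head. The function name is made up for illustration, and the figure ignores seek time entirely.

```python
def avg_rotational_latency_ms(rpm: float) -> float:
    """Average time spent waiting for the platter to rotate to the data.

    Assumes the requested sector is, on average, half a revolution away.
    Seek time (moving the head between tracks) is not included.
    """
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    ms_per_revolution = 60_000 / rpm  # 60,000 ms in a minute
    return ms_per_revolution / 2


if __name__ == "__main__":
    for rpm in (5400, 7200, 15000):
        print(f"{rpm} rpm: {avg_rotational_latency_ms(rpm):.2f} ms")
```

For a 7200 rpm drive this works out to roughly 4.17 ms per access before any data is read, a delay an SSD simply doesn't have.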

While an SSD doesn’t suffer from fragmentation performance issues, data on a NAND-based SSD cannot be overwritten in place. In order for data to be written to a particular location, the block containing it must first be erased. This can have a significant impact on performance and requires the operating system to keep track of blocks of data that aren’t in use. The operating system then uses a command called TRIM to tell the SSD which blocks it can wipe.
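The erase-before-write rule and the role of TRIM can be sketched with a toy model. This is purely illustrative: `ToyBlock` and its methods are hypothetical names, not any real driver or firmware API, and a real controller is far more sophisticated.

```python
class ToyBlock:
    """Hypothetical model of a single NAND block (not a real API)."""

    def __init__(self, pages: int = 4):
        self.pages = [None] * pages    # None means erased and ready to write
        self.stale = [False] * pages   # True means the OS no longer needs the page
        self.erase_count = 0

    def write(self, index: int, data: str) -> None:
        # A page that already holds data cannot be overwritten in place.
        if self.pages[index] is not None:
            raise RuntimeError("page must be erased before it can be written")
        self.pages[index] = data
        self.stale[index] = False

    def trim(self, index: int) -> None:
        # TRIM only marks the page as unneeded; it does not erase anything itself.
        self.stale[index] = True

    def can_erase_freely(self) -> bool:
        # True when no written page still holds data the OS cares about.
        return all(p is None or s for p, s in zip(self.pages, self.stale))

    def erase(self) -> None:
        # Erasing always wipes the entire block, never a single page.
        self.pages = [None] * len(self.pages)
        self.stale = [False] * len(self.stale)
        self.erase_count += 1
```

Without the `trim` call, the drive in this model has no way of knowing that a written page is garbage, so it would have to treat the data as live until the operating system tried to write over it.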

On a normal hard drive, blocks are flagged by the operating system as ‘not in use’ but aren’t overwritten until they’re needed. The drive isn’t aware that the blocks are in this state, which isn’t an issue for a hard drive because writing and rewriting are essentially the same operation. Because an SSD must erase a block before it can be written to, a ‘rewrite’ on an SSD takes much longer than a write to an empty location. This is further complicated by the fact that SSDs store data in what are called ‘pages’, which are grouped into blocks. Each page is typically 4 KB, with 128 pages per block, and an erase command can only erase an entire block. If only part of a block needs to be erased, the remainder must be cached before the block is erased, then written back to the drive along with any new data. This is called write amplification, and it can shorten the life of the drive and reduce random write performance.
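The arithmetic behind write amplification is easy to work through using the page and block sizes quoted above. The sketch below is a simplified worst-case model that assumes the whole block is cached, erased and rewritten for every change. Real controllers typically redirect writes to already-erased pages, so actual figures are usually much lower. The function names are invented for illustration.

```python
PAGE_SIZE_KB = 4        # typical page size quoted in the text
PAGES_PER_BLOCK = 128   # typical pages per block quoted in the text


def block_size_kb(page_size_kb: int = PAGE_SIZE_KB,
                  pages_per_block: int = PAGES_PER_BLOCK) -> int:
    """Smallest unit the drive can erase, in KB."""
    return page_size_kb * pages_per_block


def worst_case_write_amplification(pages_changed: int,
                                   pages_per_block: int = PAGES_PER_BLOCK) -> float:
    """Pages physically written divided by pages the host asked to change.

    Assumes the naive read-erase-rewrite cycle for the whole block.
    """
    if not 1 <= pages_changed <= pages_per_block:
        raise ValueError("pages_changed must be between 1 and pages_per_block")
    return pages_per_block / pages_changed


if __name__ == "__main__":
    print(block_size_kb())                       # 512 (KB per block)
    print(worst_case_write_amplification(1))     # 128.0
    print(worst_case_write_amplification(128))   # 1.0
```

In other words, under this naive model, changing a single 4 KB page means rewriting a full 512 KB block, while rewriting the whole block at once costs nothing extra.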

Drive Recovery

We’ve all experienced or know someone who’s experienced drive failure or data loss. Companies like Drive Savers and Gillware specialize in data recovery from all sorts of hard drive failures. One of the more extreme measures that occasionally gets used in data recovery involves specialized tools that read data from an individual platter and write it to another individual platter in an attempt to reconstruct its contents.

But how does one recover data from an SSD?

Often the data can’t be recovered. There are many off-the-shelf recovery tools that the average user can use to get data back from a failing hard drive or SSD, but those tools typically rely on the ‘flagged’ state of data, meaning data stored in a block that is now marked as ‘usable’ but hasn’t yet been overwritten. On an SSD, this method can be much less reliable because of TRIM and garbage collection.

As mentioned earlier, SSDs need to have blocks erased before data can be written to them. As a result, it’s beneficial to erase those blocks as soon as possible rather than wait until the space is needed, since the rewrite operation is so costly in terms of performance. These erasures effectively ‘zero’ the deleted blocks, making it extremely difficult to recover lost data. To complicate matters, each manufacturer uses different algorithms for wear leveling and performance boosting. That information is generally proprietary and not available to those specializing in drive recovery. A failed drive doesn’t necessarily mean lost data, but recovery is probably not going to be cheap, if it’s even possible.

So which is better?

There has never been a perfect storage solution, only improvements over older solutions. Whether one is better than the other depends heavily on the application (not to mention the size of your wallet). Both have risks, and each has advantages over the other. Even with write amplification, SSDs tend to outperform normal hard drives, but if you’re looking to use SSDs in a RAID array, you may be in for a challenge, as the TRIM command is still not widely implemented in RAID hardware. If you’re looking to bump performance on your laptop or desktop without RAID, an SSD won’t disappoint, but the following can’t be emphasized enough: BACKUP! BACKUP! BACKUP!

Recommendations

I personally (as of this writing) use an early 2011 MacBook Pro with 16 GB of RAM and a 512 GB Crucial M4 SSD as the boot drive, and I have replaced the optical drive with a 750 GB 7200 rpm hard drive from Seagate. The hard drive serves as storage for photos as well as my Boot Camp partition (which I really just use for video games). If you’re an Adobe Lightroom fan, you can see a pretty noticeable performance bump by storing the catalog file on your SSD. From power-on to the login screen, my laptop takes about 13 seconds. Overall boot time with all the fixings is probably around 30 to 45 seconds.

This article is by no means exhaustive, and if you’re on the fence about buying an SSD, I recommend spending some time on Wikipedia. If any of this information is incorrect or inaccurate, feel free to post a comment and I’ll update appropriately.